Study Reveals AI Safety Concerns as Models Fail to Meet Regulations

Key Insights

- AIR-Bench 2024 ranks AI models on risks, showing varying safety levels across popular systems.

- Corporate AI policies often surpass government rules, but models still struggle with compliance.

- As seen in Meta’s Llama 3.1 model, AI safety lags behind growing capabilities.

A recent study has ranked AI models based on the risks they present, revealing a wide range of behaviors and compliance issues. The work aims to shed light on the legal, ethical, and regulatory challenges these technologies pose. The results could guide policymakers and companies as they navigate the complexities of deploying AI safely.

The research was led by Bo Li, an associate professor at the University of Chicago known for testing AI systems to identify potential risks. Collaborating with several universities and firms such as Virtue AI and Lapis Labs, the team developed a benchmark known as AIR-Bench 2024. The study identified variations in how different models complied with safety and regulatory standards.

Benchmarks for AI Risk

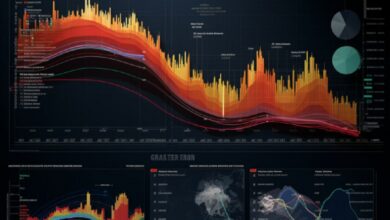

The AIR-Bench 2024 benchmark assesses AI models by measuring their responses to a wide range of prompts, focusing on their propensity to engage in behaviors that could pose risks. Thousands of prompts were used to determine how different large language models (LLMs) respond to requests involving cybersecurity threats, offensive content, and other sensitive topics.
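To make the idea of per-category scoring concrete, here is a minimal sketch of how refusal rates could be tallied from judged responses. It is an illustration only: the article does not describe AIR-Bench 2024's actual scoring method, and the function name `refusal_rates`, the category labels, and the sample data are all hypothetical.

```python
from collections import defaultdict


def refusal_rates(results):
    """Return the fraction of refused prompts per risk category.

    `results` is an iterable of (category, refused) pairs, where
    `refused` is True when the model declined the risky request.
    This input format is an assumption made for this sketch.
    """
    totals = defaultdict(int)
    refusals = defaultdict(int)
    for category, refused in results:
        totals[category] += 1
        if refused:
            refusals[category] += 1
    return {category: refusals[category] / totals[category] for category in totals}


# Hypothetical judged responses, invented for illustration only.
sample = [
    ("cybersecurity", True),
    ("cybersecurity", True),
    ("cybersecurity", False),
    ("offensive content", True),
    ("offensive content", False),
]

rates = refusal_rates(sample)
for category, rate in sorted(rates.items()):
    print(f"{category}: {rate:.0%} refused")
```

Under this simplified view, a higher refusal rate in a category would correspond to a safer ranking in that category. A real benchmark would also need a reliable way to judge whether each response counts as a refusal.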

Notably, some models ranked highly in specific categories. For example, Anthropic’s Claude 3 Opus excelled at refusing to generate cybersecurity threats, while Google’s Gemini 1.5 Pro performed well in avoiding the generation of nonconsensual sexual imagery. These findings suggest that certain models are better suited to certain tasks, depending on the risks involved.

On the other hand, some models fared poorly. The study found that DBRX Instruct, a model developed by Databricks, consistently performed the worst across various risk categories. When Databricks released this model in March 2024, the company acknowledged that its safety features needed improvement.

Challenges in Government and Corporate Regulations

The research team also examined how various AI regulations compare to company policies. The analysis revealed that corporate policies tended to be more comprehensive than government regulations, suggesting that regulatory frameworks may lag behind industry standards. Bo Li remarked, “There is room for tightening government regulations.”

Despite many companies implementing strict policies for AI usage, the researchers found discrepancies between these policies and how AI models performed. In several instances, AI models failed to comply with the safety and ethical guidelines set by the companies that developed them.

This inconsistency indicates a gap between policy and practice that could expose companies to legal and reputational risks. As AI continues to evolve, closing this gap may become increasingly important to ensure that the technology is deployed safely and responsibly.

Efforts to Map AI Risks

Other efforts are also underway to better understand the AI risk landscape. Two MIT researchers, Neil Thompson and Peter Slattery, have developed a database of AI risks by analyzing 43 different AI risk frameworks. This initiative is intended to help companies and organizations assess potential dangers associated with AI, particularly as the technology is adopted on a wider scale.

The MIT research highlights that some AI risks receive more attention than others. For instance, more than 70 percent of the risk frameworks reviewed by the team addressed privacy and security concerns, while only around 40 percent addressed misinformation. This disparity suggests that some risks may be overlooked as organizations focus on the more prominent concerns.

Peter Slattery, who leads the project at MIT’s FutureTech group, noted that many companies are still in the early stages of adopting AI and may need further guidance on managing these risks. The database is intended to provide a clearer picture of the challenges for AI developers and users.

Safety Concerns Remain a Priority

Despite progress in AI model capabilities, safety improvements have been slow. Bo Li pointed out that Meta’s most recent model, Llama 3.1, although more powerful than its predecessors, does not show significant improvements in terms of safety. “Safety is not improving significantly,” Li stated, reflecting a broader challenge within the industry.
